Overview

This site is a set of topics that teams may find useful while modernising an application from virtual machines into containers.

The goal is not to provide a reference architecture but to compile a set of real-world topics that developers may encounter when moving mature applications to containers.

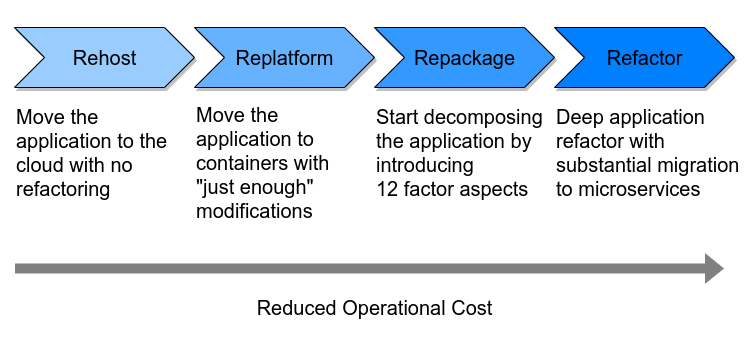

The scenarios are likely to be most relevant to the Replatform and Repackage steps illustrated in the diagram above.

These two steps are the grey areas of modernization that are usually very specific to the application and organisation being modernized and where general guidance isn't clearly mapped by the standard literature.

Standard Operating Environment

Context and problem

A common anti-pattern is to use a distribution from open source projects as the base image for specific functionality. This can be manageable in small deployments, but as the number of deployed containers grows it becomes problematic from a dependency and security management perspective.

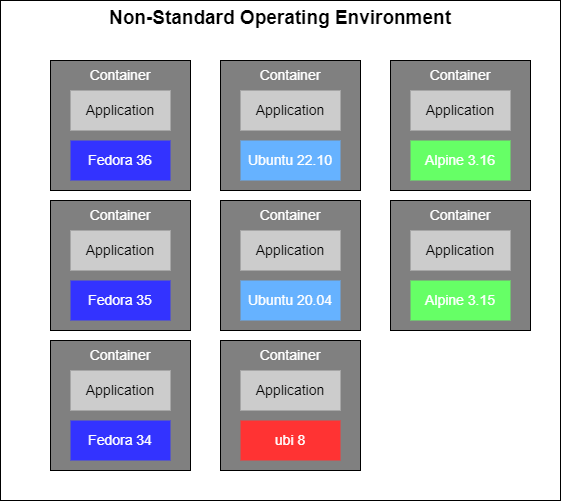

Take, for example, the deployment topology above.

In this scenario you will have:

- 6 Different versions of glibc

- 2 Different versions of musl

- 8 Different versions of OpenSSL

Solution

The recommendation here is to standardize on a base distribution image so that managing CVEs and building generic tooling for debugging is simpler.

My personal preference is to use Red Hat Universal Base Images, but an experienced team could also target Alpine, as it has an opinionated security posture that some teams may find useful, or take a distroless approach.

Issues and considerations

Understand how you are going to get support either internally or externally for the base images.

Be conscious about the overhead of

When to use this scenario

A standard operating environment initiative should be embarked on when starting the Replatform phase. As the number of base operating system types grows beyond five, consider standardising.
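One rough way to see how close a code base is to that threshold is to count the distinct images named on FROM lines. The sketch below is illustrative only: `list_base_images` and `count_base_images` are hypothetical helpers, not part of the scenario repository, and they assume that build files have names ending in `Dockerfile`. Distinct base images are only a proxy for distinct operating system types, and references to earlier build stages by alias are counted as if they were images.

```shell
#!/bin/sh
# list_base_images DIR
# Hypothetical helper: print every distinct image named on a FROM line in
# any file under DIR whose name ends in "Dockerfile".
list_base_images() {
    find "$1" -type f -name '*Dockerfile' -exec awk '
        toupper($1) == "FROM" {
            i = 2
            while ($i ~ /^--/) i++   # skip flags such as --platform=...
            print $i
        }' {} + | sort -u
}

# count_base_images DIR
# Print how many distinct base images list_base_images finds under DIR.
count_base_images() {
    list_base_images "$1" | wc -l | tr -d ' '
}
```

Running `count_base_images .` from the root of a repository gives a single number that can be compared against the threshold, and `list_base_images .` shows which images are contributing to it.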

Example

As well as targeting a single distribution from a vendor, organisations may also want to build a base image internal to their organisation, with the following benefits:

- Userspace libraries, runtimes etc are consistent

- Improved inner dev loop with shorter docker builds

- Easier to update and integrate with build on change processes

See the Dockerfiles in the scenario folder for a reference implementation.

orgbase.Dockerfile is the organisational base image.

In this example the base image is built with

podman build -t quay.io/mod_scenarios/orgbase -f orgbase.Dockerfile

# Create an organisational base image from a vendor release.

FROM registry.access.redhat.com/ubi8/ubi-minimal

# Update it with a specific configuration.

# ubi-minimal uses `microdnf`, a small package management system.

RUN microdnf update && microdnf install -y procps-ng

app.Dockerfile is the image that will be deployed.

# Use the organisation base image

FROM quay.io/mod_scenarios/orgbase

# this is just a simple example but you may want to integrate

# this orgbase image approach with the streamline-builds scenario

ENTRYPOINT [ "sh", "-c", "while true; do ls /root/buildinfo/; sleep 30; done" ]

Related resources

Streamline Builds

Ensure only the required assets are deployed in a container image.

Context and problem

In order to make images as small as possible and minimise the impact of security risks it's important to use a minimal base image that you build up with capabilities.

Solution

Use the container builder pattern.

This scenario consists of a Dockerfile that builds a Rust project with external SSL dependencies and then configures a minimal image to use it.

A working reference is available in the repo for this project.

Issues and considerations

While a single base image is the desired goal, it may be necessary to use more than one base image.

Ensure security scans of the images and a process to manage them are in place.

Understand how you are going to support the OS layer and related system libraries.

When to use this scenario

As you move into the Replatform stage of modernization it is good practice to put this scenario in place.

Example

###############################################

# This is the configuration for the container

# that will build the assets for deployment

###############################################

# Start with a standard base image. See the Standard Operating Environment folder for more details.

FROM registry.access.redhat.com/ubi8/ubi as rhel8builder

# Example of installing development libs for the build

RUN yum install -y gcc openssl-devel && \
    rm -rf /var/cache/dnf && \
    curl https://sh.rustup.rs -sSf | sh -s -- -y

COPY . /app-build

WORKDIR "/app-build"

# Set up build paths and other config

ENV PATH=/root/.cargo/bin:${PATH}

RUN cargo build --release

########################################################################

# This is the configuration for the container #

# that will be distributed. #

# You may also want to consider using an organisational base image. #

# See the standard operating environment folder #

########################################################################

FROM registry.access.redhat.com/ubi8/ubi-minimal

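# ubi-minimal uses `microdnf`, a small package management system.

# shadow-utils provides groupadd and useradd, which ubi-minimal does not ship.

RUN microdnf update && microdnf install -y procps-ng shadow-utils

# Add a system group and user called `appuser`

RUN groupadd -r appuser && useradd -r -g appuser appuser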

WORKDIR "/app"

COPY --from=rhel8builder /app-build/target/release/stream-line-builds ./

# set the user that will run the application

USER appuser

CMD ["./stream-line-builds"]

Related resources

Standard Operating Environment

Dependency Injection

Context and problem

Dependency injection (DI) or "well known plugins" are a common and robust pattern in customization scenarios in the enterprise.

It's a pattern that has been used by ISVs to enable their downstream users to customize products.

This can present challenges as ISVs modernize to containers, because the ISVs want to deliver software in immutable containers but customization by including libraries is still a concrete requirement.

Solution

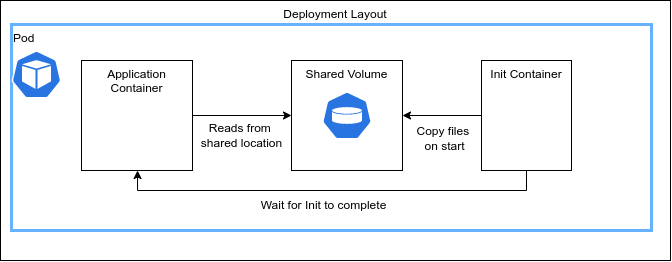

Configure the application container at start up with an init container.

The sample defines a disk volume that enables config to be passed from an initialization container to the main application container.

This scenario provides a pod definition as a starting point that can be extended to meet the requirements.

In production this should be a persistent volume claim and NOT a hostPath, as deployments will clobber each other.

Issues and considerations

Loading libraries and config from an external source may require managing PATH priorities and loading orders that could potentially be brittle.
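The PATH ordering concern can be checked ahead of time rather than discovered at runtime. The sketch below is illustrative only: `resolve_with_override` is a hypothetical helper, not part of the scenario, and the directory and command names passed to it are assumptions. It prints which file a command name resolves to when a customer-supplied directory is searched before the vendor default.

```shell
#!/bin/sh
# resolve_with_override OVERRIDE_DIR DEFAULT_DIR NAME
# Hypothetical helper: print the path that NAME resolves to when
# OVERRIDE_DIR is searched before DEFAULT_DIR.
resolve_with_override() {
    # The subshell keeps the caller's PATH unchanged.
    (
        PATH="$1:$2:$PATH"
        command -v "$3"
    )
}
```

For example, `resolve_with_override /pod-data/bin /usr/local/bin myplugin` would show whether a file delivered by the init container shadows the copy shipped in the image. Both paths and `myplugin` are placeholders.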

Areas of responsibility between vendor and client can become blurred, although no worse than in the virtual machine scenario.

When to use this scenario

This pattern is probably most relevant when moving from Rehost to Replatform.

Use this approach when you have additional configuration that needs to be deployed on a per container basis.

Example

apiVersion: v1
kind: Pod
metadata:
  name: di-pod
  labels:
    app.kubernetes.io/name: DiApp
spec:
  containers:
    # The main container that echoes the file created by the init container.
    - name: app-container
      image: registry.access.redhat.com/ubi8/ubi-minimal
      command: ['sh', '-c', 'cat /usr/share/initfile && sleep 3600']
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share
  initContainers:
    # A simple init container that echoes a file into the shared data folder on startup
    - name: init-deps
      image: registry.access.redhat.com/ubi8/ubi-minimal
      volumeMounts:
        - name: shared-data
          mountPath: /pod-data
      command: ['sh', '-c', "echo Hello from the init container > /pod-data/initfile"]
  # Defines a volume to enable config to be passed to the main container.
  # In production this should be a persistent volume claim,
  # NOT a hostPath, as deployments will clobber each other.
  volumes:
    - hostPath:
        path: /tmp/di
        type: Directory
      name: shared-data

To run the scenario locally, make sure you have podman installed and run the following commands:

- Create a folder to match the volume definitions

mkdir /tmp/di

- Play the pod definition with podman

podman play kube pod.yaml

- Inspect the logs to see that the main container has access to the file generated by the init container.

podman logs di-pod-app-container

Hello from the init container

- Clean up

podman pod stop di-pod

podman pod rm di-pod

rm -fr /tmp/di

Related resources

Standalone Podman

Context and problem

The first step a lot of organizations take when moving from virtual servers to containers is to run a single container within a virtual server instance using a container runtime such as docker or podman.

While this may seem an unnecessary overhead, it provides a path for the software development team to use containers in dev/test and in the continuous integration process while not putting too much burden on the operations organisation.

While it's not an ideal scenario, as the orchestration tooling around containers is one of their key benefits, it does enable an organisation to break up the modernization journey into achievable goals and doesn't require a larger organisational transformation.

Solution

The selection of a container management system depends on whether an organisation intends to eventually adopt Kubernetes. If Kubernetes is the end goal, it makes sense to target podman, as it has built-in support for defining collections of containers in pods in the same way as Kubernetes, which will help smooth the transition later in the journey.

Issues and considerations

Organisations should be aware that most of the time this is an interim step and should be conscious not to start building out a custom container orchestration system when moving to Kubernetes would be the better option.

Also consider the refactoring required for observability and CI/CD. If this is a substantial piece of work, it may make sense to target Kubernetes directly or at least ensure the changes align with the target architecture in the Refactor step.

Managing pods with systemd and podman is an evolving space, so expect some changes as podman evolves.

The podman generate systemd --files --name approach had some rough edges, and configuring a system that could survive a reboot was never achieved with it. It is strongly advised to use the systemctl --user start podman-kube approach given below unless specific circumstances prevent this.

When to use this scenario

In the early stages of moving from Rehost to Replatform.

Example

Prerequisites

- Systemd compatible distribution

- Podman

Tested on

- Ubuntu 22.04 - podman 4.3.0

- Fedora 36 - podman 4.2.1

Steps

- Create a file called top.yaml and add the following content

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: top-pod
  name: top-pod
spec:
  restartPolicy: Never
  containers:
    - command:
        - top
      image: docker.io/library/alpine:3.15.4
      name: topper
      securityContext:
        capabilities:
          drop:
            - CAP_MKNOD
            - CAP_NET_RAW
            - CAP_AUDIT_WRITE
      resources: {}

- Create the escaped configuration string

escaped=$(systemd-escape "$PWD/top.yaml")

- Run the yaml file as a systemd service

systemctl --user start "podman-kube@$escaped.service"

- Enable the service so that it persists across reboots.

systemctl --user enable "podman-kube@$escaped.service"

- Reboot your machine to confirm the service survives reboots.

Additional Notes

The systemd user instance is killed after the last session for the user is closed. The systemd user instance can be started at boot and kept running even after the user logs out by enabling lingering using

loginctl enable-linger <username>

Related resources

Microtemplates with Helm

Context and problem

The ability to configure containers with external definitions is a key part of 12-factor apps. As organisations adopt containers, these external definitions tend to travel as shell scripts included in the code repository. These shell scripts quickly become unwieldy and have to be rewritten when an organisation moves to an orchestration platform.

Solution

Adopting a template engine early in the modernization cycle can help mitigate the problem of shell scripts and prepare an organisation for the move towards Kubernetes.

Issues and considerations

Helm as a dependency may require additional training for the organisation.

When to use this scenario

Helm is useful from the Replatform point of the journey onwards. This microtemplating option targets the developer loop and is especially useful at the start of the Replatform roadmap.

Example

- Create a file called Chart.yaml with the following content

apiVersion: v2

name: top-helm-app

type: application

version: 0.1.0

appVersion: "1.16.0"

- Create a folder called templates and create a file called pod.yaml in it with the following content

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: top-helm-pod
  name: top-helm-top-pod
spec:
  restartPolicy: Never
  containers:
    - command:
        - top
      image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
      name: top-helm-container
      resources: {}

- Create a file called values.yaml with the following content

image:
  repository: docker.io/library/alpine
  tag: "3.15.4"

- Validate the pod template is generated correctly by running

helm template .

---
# Source: top-helm-app/templates/pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: top-helm-top-pod
spec:
  containers:
    - command:
        - top
      name: top-helm-container
      image: "docker.io/library/alpine:3.15.4"

Next steps

- Run the file with podman

helm template . | podman play kube -

- Delete the pod

podman pod stop top-helm-top-pod

podman pod rm top-helm-top-pod
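Because the image is parameterised through values.yaml, the same chart can be rendered against a different image tag without editing any files. The sketch below is illustrative only: `render_pod` is a hypothetical helper and the tag passed to it is an example value. It uses Helm's `--set-string` flag so that the tag is always treated as a string.

```shell
#!/bin/sh
# render_pod TAG
# Hypothetical helper: render the chart in the current directory with the
# image tag overridden on the command line instead of editing values.yaml.
render_pod() {
    helm template . --set-string "image.tag=$1"
}
```

The rendered output can be piped to podman in the same way as before, for example `render_pod 3.16.0 | podman play kube -`.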

Related resources

WASI Containers

End-to-end demonstration of running WASI in an OCI-compliant container runtime using crun on Fedora 37.

Context and problem

In resource-constrained environments such as edge locations, or in sustainability-focused organisations, the benefits of containers need to be refined to make the images as small as possible, improve startup times and minimise the energy used for execution.

Solution

Use a minimal container runtime environment written in a high performance language that only requires a scratch based image but still supports multiple languages.

Issues and considerations

WASI is still a maturing standard with networking and device integration still in the early stages of development.

When to use this scenario

Use this scenario when you have environments with limited resources such as edge or when you need fast container start up times.

Example

See the folder https://gitlab.com/no9/modernization-scenarios/-/tree/main/scenarios/wasi-containers for the full source code.

prereqs

First you need to install podman, wasmedge and buildah.

sudo dnf install podman wasmedge buildah

Then you need to build and install crun, a minimal container runtime, with WasmEdge support.

git clone https://github.com/containers/crun.git

cd crun/

./autogen.sh

./configure --with-wasmedge

make

sudo mv /usr/bin/crun /usr/bin/crun.backup

sudo mv crun /usr/bin

Now install rust with the WASI target.

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

rustup target add wasm32-wasi

create the source files

Create a new folder called wasi-containers

mkdir wasi-containers

Add the crate configuration in Cargo.toml

[package]

name = "wasi-containers"

version = "0.1.0"

edition = "2021"

Add a src folder and create a simple program in src/main.rs that prints the environment variables.

use std::env;

fn main() {
    println!("Hello, world!");
    // Iterate over the (key, value) pairs returned by env::vars().
    for (key, value) in env::vars() {
        println!("{key}: {value}");
    }
}

Finally create a Dockerfile in the root folder of the project which copies the wasm file into an empty container.

FROM scratch

COPY target/wasm32-wasi/release/wasi-containers.wasm /

CMD ["./wasi-containers.wasm"]

build

Build the container image

cargo build --release --target wasm32-wasi

buildah build --annotation "module.wasm.image/variant=compat" -t mywasm-image

run

podman run -e LIFE="GOOD" mywasm-image:latest

outputs

Hello, world!

PATH: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

TERM: xterm

container: podman

LIFE: GOOD

HOSTNAME: ad0c6217d785

HOME: /